Web scraping and web crawling are terms often used interchangeably, but they serve distinct purposes in the world of data collection. Understanding these differences is critical for businesses that rely on data from websites to make decisions.

At its core, web crawling is about discovering and indexing web pages. Search engines like Google deploy web crawlers to scan pages, follow links, and organize content so it can be retrieved efficiently. Web scraping, on the other hand, focuses on extracting specific data from websites for actionable business use.

There are approximately 1.12 billion websites globally, of which only about 17–18% are actively maintained, and hundreds of thousands of new sites are created daily[1]. Enterprises must know how to collect and organize web data efficiently to gain a competitive edge.

What is Web Scraping?

Web scraping is the process of collecting data from websites automatically. It converts unstructured content from web pages into structured formats like CSV, Excel, or databases.

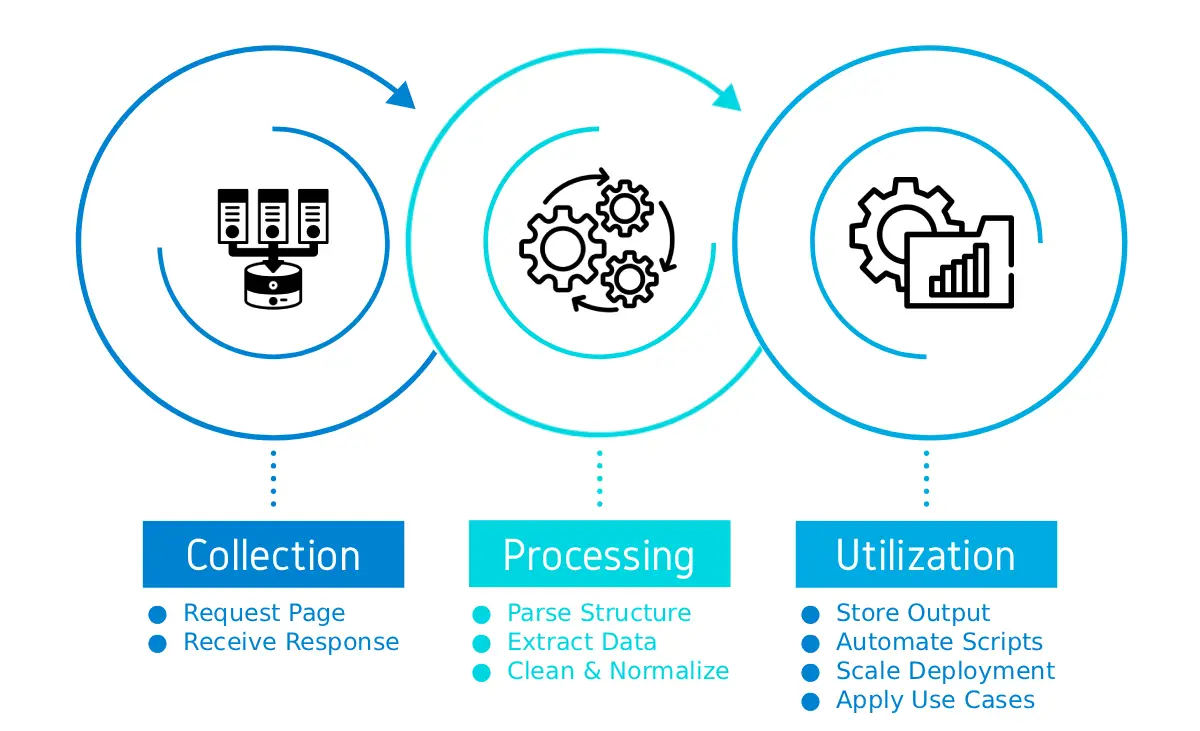

How it works

- Requesting the Web Page: A scraping program sends a request to a website server to fetch the HTML content.

- Parsing the HTML: The HTML is parsed to locate the required data fields.

- Data Extraction: The target information is extracted, cleaned, and normalized.

- Storage: The data is saved in a structured format for analytics, business intelligence, or further processing.
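
The four steps above can be sketched with nothing but the Python standard library. This is a minimal illustration, not a production scraper: the HTML snippet, the `product`/`name`/`price` class names, and the `ProductParser`, `scrape`, and `to_csv` helpers are all hypothetical, and the request step is replaced by an inline string so the example runs offline.

```python
import csv
import io
from html.parser import HTMLParser

# Hypothetical page content. In practice this string would come from an
# HTTP request to the target site (step 1).
SAMPLE_HTML = """
<ul>
  <li class="product"><span class="name">Widget</span><span class="price"> $9.99 </span></li>
  <li class="product"><span class="name">Gadget</span><span class="price">$24.50</span></li>
</ul>
"""


class ProductParser(HTMLParser):
    """Step 2: locate the text of <span class="name"> and <span class="price">."""

    def __init__(self):
        super().__init__()
        self.rows = []       # one dict per product
        self._field = None   # field currently being read, if any

    def handle_starttag(self, tag, attrs):
        cls = dict(attrs).get("class")
        if tag == "li" and cls == "product":
            self.rows.append({})
        elif tag == "span" and cls in ("name", "price") and self.rows:
            self._field = cls

    def handle_data(self, data):
        if self._field:
            self.rows[-1][self._field] = data.strip()

    def handle_endtag(self, tag):
        if tag == "span":
            self._field = None


def scrape(html):
    """Step 3: extract the fields, then clean and normalize them."""
    parser = ProductParser()
    parser.feed(html)
    return [
        {"name": r["name"], "price": float(r["price"].lstrip("$"))}
        for r in parser.rows
    ]


def to_csv(rows):
    """Step 4: store the records in a structured format (CSV text here)."""
    buf = io.StringIO()
    writer = csv.DictWriter(buf, fieldnames=["name", "price"])
    writer.writeheader()
    writer.writerows(rows)
    return buf.getvalue()


rows = scrape(SAMPLE_HTML)
print(to_csv(rows))
```

In a real project a library such as BeautifulSoup or Scrapy would usually replace the hand-written parser; the standard-library version is used here only to keep the sketch self-contained.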

Technologies like Python, Scrapy, BeautifulSoup, and Selenium are widely used. Enterprise-level operations often integrate APIs and cloud infrastructure for scalability.

Examples of use cases

- E-commerce Pricing: Monitor competitor prices and product availability in real time.

- Market Research: Aggregate reviews, ratings, and trends across multiple websites.

- Job Aggregation: Extract postings from multiple platforms for recruitment analytics.

- Financial Services: Collect investment data and market metrics for modeling.

What is Web Crawling?

Web crawling is a systematic process of discovering web pages across the internet. It’s the backbone of search engine indexing and large-scale data aggregation.

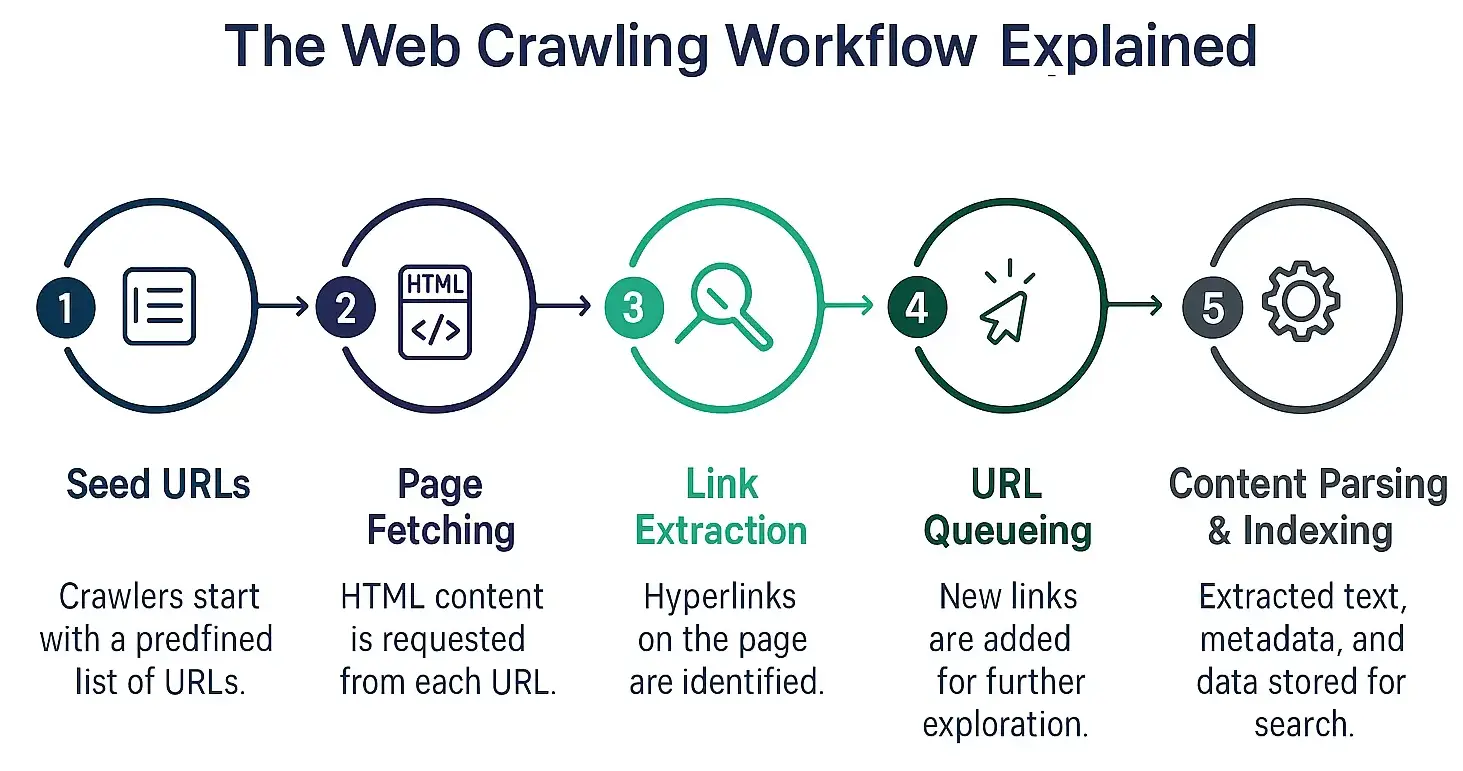

How it works

- Seed URLs: Crawlers start with a list of known URLs.

- Page Discovery: Crawlers follow links from these pages to new pages.

- Content Indexing: Each page’s content is analyzed and stored in a searchable database.

- Continuous Updates: Crawlers revisit pages regularly to capture changes.
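
The seed, discovery, and indexing steps can be illustrated with a small breadth-first crawler. This is a sketch under stated assumptions: `FAKE_WEB` is a hypothetical three-page site standing in for real HTTP responses, `crawl` and `LinkParser` are illustrative names, and the "index" is just a dictionary of raw HTML. Revisit scheduling (the continuous-update step) is left out.

```python
from collections import deque
from html.parser import HTMLParser
from urllib.parse import urldefrag, urljoin

# Hypothetical site used instead of live network requests.
FAKE_WEB = {
    "https://example.com/": '<a href="/a">A</a> <a href="/b">B</a>',
    "https://example.com/a": '<a href="/b">B</a> <a href="/">home</a>',
    "https://example.com/b": '<a href="/a#top">A again</a>',
}


class LinkParser(HTMLParser):
    """Collects the href value of every <a> tag on a page."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href:
                self.links.append(href)


def crawl(seeds, fetch, max_pages=100):
    """Breadth-first crawl. `fetch(url)` returns HTML, or None on failure."""
    frontier = deque(seeds)   # URLs waiting to be visited
    seen = set(seeds)         # URLs already queued, to avoid loops
    index = {}                # url -> page content
    while frontier and len(index) < max_pages:
        url = frontier.popleft()
        html = fetch(url)
        if html is None:
            continue
        index[url] = html
        parser = LinkParser()
        parser.feed(html)
        for href in parser.links:
            # Resolve relative links and drop #fragments before queueing.
            absolute, _fragment = urldefrag(urljoin(url, href))
            if absolute not in seen:
                seen.add(absolute)
                frontier.append(absolute)
    return index


index = crawl(["https://example.com/"], FAKE_WEB.get)
print(list(index))
```

Passing `fetch` in as a parameter keeps the traversal logic separate from networking, so the same loop could be pointed at a real HTTP client later.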

Advanced crawlers use distributed systems to scan millions of pages simultaneously. In 2012, Amit Singhal (Google’s SVP of Search) revealed that Google had found 30 trillion unique URLs, crawled 20 billion pages daily, and processed 100 billion queries per month[2].

Examples of use cases

- Search Engine Indexing: Google, Bing, and Yahoo use crawlers to organize the web.

- Website Audits: Enterprises audit their own web infrastructure for SEO and compliance.

- Data Aggregation: Large datasets for AI/ML training require full-scale crawling.

- Content Discovery: Identifying new articles, blogs, or product listings.

Unlike scrapers, which extract specific data points, crawlers are optimized for breadth of coverage.

Web Scraping vs Web Crawling: Key Differences

| Feature | Web Scraping | Web Crawling |
|---|---|---|
| Purpose | Extract specific data | Discover & index pages |
| Process | Parse HTML → extract → store | Traverse links → index → store |
| Output | Structured data (CSV, DB) | Page URLs, metadata, indexing info |
| Scale | Targeted websites/pages | Millions of pages globally |
| Users | Analysts, businesses, data teams | Search engines, auditors, researchers |
| Data Type | Specific fields, tables, images | Full HTML content, links, metadata |
| Technology | Scrapy, BeautifulSoup, Selenium, APIs | Distributed crawlers, bots, cloud systems |
| Frequency | Scheduled or on-demand | Continuous or periodic |

Challenges & Limitations (with solutions)

Web Scraping Challenges

1. Anti-Bot Measures: Captchas, IP bans

Websites deploy anti-bot systems to block scrapers. Captchas, rate-limiting, and IP bans disrupt workflows. Solutions include rotating proxies, captcha-solving services, and stealth scraping methods like headless browsers to mimic real user behavior effectively.

2. Dynamic Content: Pages rendered with JavaScript

Modern websites use JavaScript frameworks, making static HTML parsing ineffective. Traditional scrapers miss hidden data. Solutions involve headless browsers, JavaScript rendering engines, and adaptive frameworks that execute scripts, ensuring complete and accurate data extraction.

3. Data Consistency: Websites frequently update layouts

Frequent design or layout changes break scrapers, causing incomplete or inaccurate results. Maintaining consistency becomes difficult. Solutions include machine learning-based parsers, adaptive scraping frameworks, and monitoring pipelines to adjust automatically when websites update structures.
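
One simple form of monitoring pipeline is a health check on each scraped batch: if too many records are missing required fields, the site's layout has probably changed. The sketch below assumes records are plain dictionaries; the function names and the 20% threshold are illustrative choices, not a standard.

```python
def missing_rate(records, required_fields):
    """Fraction of records that lack at least one required field."""
    if not records:
        return 1.0  # an empty batch is treated as fully broken
    bad = sum(
        1
        for record in records
        if any(record.get(field) in (None, "") for field in required_fields)
    )
    return bad / len(records)


def layout_probably_changed(records, required_fields, threshold=0.2):
    """True when the share of incomplete records exceeds the threshold."""
    return missing_rate(records, required_fields) > threshold


healthy = [
    {"name": "Widget", "price": "9.99"},
    {"name": "Gadget", "price": "24.50"},
]
broken = [
    {"name": "Widget", "price": ""},   # price selector no longer matches
    {"name": "Gadget"},                # field missing entirely
]

print(layout_probably_changed(healthy, ["name", "price"]))
print(layout_probably_changed(broken, ["name", "price"]))
```

A check like this does not repair the scraper, but it turns silent data loss into an alert that can trigger a selector update.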

Web Crawling Challenges

1. Scale & Performance: Crawling millions of pages requires distributed architecture

Large-scale crawling demands massive infrastructure. Millions of URLs strain servers and storage. Solutions include distributed crawlers, cloud-based infrastructure, efficient scheduling, and prioritization of critical URLs to balance resources while maintaining crawling speed and accuracy.
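
URL prioritization and work distribution can be sketched with a priority queue and a hash-based shard function. Everything here is an assumption made for illustration: the `PriorityFrontier` class, the convention that a lower number means higher priority, and the choice to shard by hostname so that each site is handled by a single worker.

```python
import hashlib
import heapq
import itertools
from urllib.parse import urlsplit


class PriorityFrontier:
    """Queue of URLs to crawl, ordered by priority (lower = sooner)."""

    def __init__(self):
        self._heap = []
        self._seen = set()
        self._counter = itertools.count()  # tie-breaker keeps insertion order

    def add(self, url, priority):
        """Queue a URL once; returns False if it was already queued."""
        if url in self._seen:
            return False
        self._seen.add(url)
        heapq.heappush(self._heap, (priority, next(self._counter), url))
        return True

    def pop(self):
        _priority, _order, url = heapq.heappop(self._heap)
        return url

    def __len__(self):
        return len(self._heap)


def shard_for(url, num_workers):
    """Map a URL to a worker by hashing its hostname (stable across runs)."""
    host = urlsplit(url).hostname or ""
    digest = hashlib.sha256(host.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % num_workers


frontier = PriorityFrontier()
frontier.add("https://example.com/archive/2009", priority=5)
frontier.add("https://example.com/", priority=0)
frontier.add("https://example.com/pricing", priority=1)

while frontier:
    print(frontier.pop())
```

Sharding by hostname also makes per-site rate limiting easier, because all requests to one host pass through the same worker.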

2. Duplicate Content: Redundant pages slow indexing

Crawlers often fetch duplicate or near-duplicate content, wasting resources. This inflates data storage and slows indexing. Solutions include content fingerprinting, canonicalization checks, and deduplication algorithms to streamline crawling and improve efficiency in large datasets.
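
Two of these techniques, URL canonicalization and content fingerprinting, can be sketched as follows. The list of tracking parameters and the normalization rules are assumptions chosen for the example; real crawlers tune both. Note that a cryptographic hash only catches exact duplicates after normalization, while near-duplicate detection typically relies on similarity hashing such as SimHash or MinHash.

```python
import hashlib
import re
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Assumed set of query parameters that do not change page content.
TRACKING_PARAMS = {"utm_source", "utm_medium", "utm_campaign"}


def canonicalize(url):
    """Normalize a URL so trivially different forms compare equal."""
    parts = urlsplit(url)
    query = sorted(
        (key, value)
        for key, value in parse_qsl(parts.query, keep_blank_values=True)
        if key not in TRACKING_PARAMS
    )
    path = parts.path or "/"
    # Lowercase scheme and host, sort the query, drop the #fragment.
    return urlunsplit(
        (parts.scheme.lower(), parts.netloc.lower(), path, urlencode(query), "")
    )


def fingerprint(text):
    """Hash of the page text after collapsing whitespace and case."""
    normalized = re.sub(r"\s+", " ", text).strip().lower()
    return hashlib.sha256(normalized.encode("utf-8")).hexdigest()


seen_fingerprints = set()


def is_duplicate(text):
    """True if an equivalent page body has already been recorded."""
    fp = fingerprint(text)
    if fp in seen_fingerprints:
        return True
    seen_fingerprints.add(fp)
    return False


print(canonicalize("HTTPS://Example.com/page?b=2&utm_source=x&a=1#frag"))
print(is_duplicate("Hello   World\n"), is_duplicate("hello world"))
```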

3. Site Compliance: Respecting robots.txt and crawl delays

Ignoring robots.txt or crawl-delay rules can overload servers or breach compliance, and it often gets the crawler blocked. Solutions include adhering strictly to robots.txt, configuring crawl rates, and implementing throttling to ensure ethical, responsible, and compliant crawling practices.
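
Python's standard library includes `urllib.robotparser`, which can check these rules before each request. The sketch below parses an inline, hypothetical robots.txt rather than downloading one; the `example-bot` user agent, the `polite_fetch` helper, and the one-second fallback delay are assumptions for illustration.

```python
import time
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content. A live crawler would load the real file
# with parser.set_url(...) followed by parser.read().
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Crawl-delay: 2
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())


def polite_fetch(urls, fetch, user_agent="example-bot", sleep=time.sleep):
    """Fetch only allowed URLs, pausing between requests."""
    # Fall back to 1 second when the site declares no crawl delay (assumption).
    delay = parser.crawl_delay(user_agent) or 1
    results = {}
    for url in urls:
        if not parser.can_fetch(user_agent, url):
            continue  # disallowed by robots.txt, so skip it
        results[url] = fetch(url)
        sleep(delay)
    return results


urls = ["https://example.com/public", "https://example.com/private/data"]
# The fetch and sleep functions are stubbed so the example runs instantly.
pages = polite_fetch(urls, fetch=lambda u: "<html></html>", sleep=lambda s: None)
print(list(pages))
```

Injecting `sleep` as a parameter is only a testing convenience; in production the default `time.sleep` enforces the delay.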

Ethical & Legal Considerations in Both

1. Compliance:

Respecting website rules is critical. Crawlers and scrapers should always follow robots.txt instructions, site policies, and crawl-delay limits to avoid disruption. Ignoring these guidelines risks bans, legal action, and damaged business credibility.

2. Data Privacy:

Handling personal or sensitive information requires strict adherence to regulations like GDPR and CCPA. Enterprises must anonymize, secure, and process only necessary data. Failure to comply can lead to penalties, lawsuits, and reputational harm.

3. Intellectual Property:

Web data often includes copyrighted material such as text, images, or media. Extracting and redistributing without consent violates intellectual property rights. Responsible usage requires clear boundaries, permissions, and respect for original publishers’ ownership.

4. Enterprise Practices:

Enterprise-grade operations adopt compliance-first strategies, embedding legal checks into data pipelines. This ensures large-scale data extraction remains ethical, accurate, and sustainable. Balancing efficiency with legal responsibility builds long-term trust and mitigates operational risks.

Tools & Technologies Differentiation

Web Scraping Tools:

Web scrapers rely on tools like Scrapy, BeautifulSoup, Selenium, and Puppeteer for extracting data from websites. These frameworks handle structured and unstructured content, automate interactions with web pages, and parse HTML effectively. Enterprise solutions combine such tools with APIs, proxy management, and real-time scheduling to ensure scalable, accurate, and compliant data extraction workflows.

Web Crawling Tools:

Web crawling requires large-scale frameworks such as Apache Nutch, Heritrix, and StormCrawler. These systems are designed for distributed crawling, managing billions of web pages, and indexing web content efficiently. They support features like deduplication, scalability, and advanced scheduling. Enterprises use them to build continuous crawling and scraping pipelines that integrate seamlessly with search engines and big data platforms.

How to Use Web Crawling & Web Scraping to Efficiently Collect Data for Decision Making

Web crawling and web scraping together enable enterprises to access accurate, real-time data at scale. Crawling systematically discovers relevant web pages, while scraping extracts structured information tailored to business needs. By integrating both, organizations can monitor competitors, analyze markets, and track customer trends. This unified approach supports data-driven strategies, reduces manual effort, and helps decision-makers act with speed and precision.

Partner with RDS Data for enterprise-grade web scraping and crawling solutions, backed by 35+ years of expertise and proprietary technology. Connect with us today!

Key Takeaways

- Web crawling is about discovery and indexing.

- Web scraping is about extracting actionable data.

- Both complement each other in enterprise data strategies.

- Ethical, legal, and scalable approaches are critical for businesses.

References:

[1] Musemind. “How Many Websites Are There On The Internet In 2025?”

[2] Market.us Scoop. “Google Search Statistics 2025 By Algorithm, Results, Growth”
