In today’s data pipelines, whether Apache Spark ETL jobs, Snowflake ELT workflows, or multi-cloud lakehouses, the data catalogue is the guide that holds operations together. It tracks lineage, schema changes, quality scores, and pipeline performance. Without proper catalogue management, pharmaceutical research pipelines falter: postdoc drug delivery assays become untraceable, regulatory audits fail, and high-impact publications slip away.

This guide draws on years of managing pipelines at MIT World Peace University (polymer synthesis logs, clinical trial EDC feeds, and bioavailability models) to deliver 10 battle-tested practices. Expect pharmaceutical examples, practical analogies (the catalogue as a “pipeline GPS”), implementation roadmaps, and diagrams.

Why Catalogue Management Is Essential

Complex pipelines process terabytes from lab instruments (HPLC, NMR), EDC systems (REDCap), and bioreactor sensors. Poor tracking creates:

- Lineage Gaps: Cannot trace quality issues to the source

- Schema Drift: Silent column changes break ML models

- Compliance Risks: FDA regulations and GDPR demand complete audit trails

- Debugging Delays: Hours tracing Spark failures across Airflow DAGs

One folate-conjugated polymer project lost a week reconstructing assay lineages.

Proper practices cut mean time to resolution (MTTR) by 70% and enable self-service analytics.

Best Practice 1: Implement Centralized Data Catalogues

Core Idea: Store all tracking information in one queryable system, not scattered Excel sheets.

Tools: Amundsen, DataHub, Marquez (the OpenLineage reference implementation, with Airflow and Spark integrations)

Schema: Lineage paths, tags, owners, quality metrics

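Pharma Win: We tracked raw HPLC peaks through Spark scoring jobs, and auditors approved the catalogue’s JSON lineage exports.

Pro Tip: With an OpenLineage integration, Airflow emits lineage events as tasks run, so the catalogue populates itself by the time the DAG completes.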
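To make the auto-population idea concrete, here is a minimal sketch of the kind of event an integration sends to Marquez. It assumes Marquez’s OpenLineage endpoint at http://localhost:5000/api/v1/lineage (the API port in its Docker quickstart) and uses hypothetical namespace, job, and dataset names; the producer URI and schemaURL are placeholders. In practice the Airflow and Spark OpenLineage integrations build and send these events for you.

```python
import json
import urllib.request
import uuid
from datetime import datetime, timezone

# Assumed Marquez endpoint (API port used by the Marquez Docker quickstart).
MARQUEZ_LINEAGE_URL = "http://localhost:5000/api/v1/lineage"

# Placeholders: use your own producer URI and check the spec version in the OpenLineage docs.
PRODUCER = "https://example.org/hypothetical-pipeline"
SCHEMA_URL = "https://openlineage.io/spec/2-0-2/OpenLineage.json#/$defs/RunEvent"


def build_run_event(event_type, run_id, namespace, job_name, inputs, outputs):
    """Build an OpenLineage-style RunEvent as a plain dict."""
    return {
        "eventType": event_type,  # e.g. "START" or "COMPLETE"
        "eventTime": datetime.now(timezone.utc).isoformat(),
        "run": {"runId": run_id},
        "job": {"namespace": namespace, "name": job_name},
        "inputs": [{"namespace": namespace, "name": n} for n in inputs],
        "outputs": [{"namespace": namespace, "name": n} for n in outputs],
        "producer": PRODUCER,
        "schemaURL": SCHEMA_URL,
    }


def send_event(event, url=MARQUEZ_LINEAGE_URL):
    """POST the event as JSON; requires a running Marquez instance."""
    req = urllib.request.Request(
        url,
        data=json.dumps(event).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(req, timeout=10) as resp:
        return resp.status


if __name__ == "__main__":
    event = build_run_event(
        "COMPLETE",
        str(uuid.uuid4()),
        "pharma_lab",  # hypothetical namespace
        "hplc_peak_scoring",  # hypothetical job name
        inputs=["raw.hplc_peaks"],
        outputs=["scored.hplc_peaks"],
    )
    print(json.dumps(event, indent=2))
    # send_event(event)  # uncomment once Marquez is running
```

Keeping event construction separate from sending makes the payload easy to inspect or unit-test before any network call.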

Best Practice 2: Automate Complete Lineage Tracking

Core Idea: Capture every transformation step automatically.

Implementation:

- Spark: a SparkListener, such as the OpenLineage Spark integration, or a spark-lineage library

- dbt: Native lineage in the manifest.json artifact, browsable with dbt ls --select

- Snowflake: QUERY_HISTORY + query tags
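dbt writes its lineage graph to target/manifest.json, whose parent_map key maps each node ID to the IDs it depends on. A minimal sketch that walks that map to collect every upstream node of a model; the node IDs in the example are hypothetical.

```python
import json
from collections import deque


def upstream_nodes(parent_map, node_id):
    """Return every ancestor of node_id in a dbt-style parent_map (breadth-first)."""
    seen = set()
    queue = deque(parent_map.get(node_id, []))
    while queue:
        parent = queue.popleft()
        if parent in seen:
            continue  # already visited via another path
        seen.add(parent)
        queue.extend(parent_map.get(parent, []))
    return seen


def load_parent_map(path="target/manifest.json"):
    """Read parent_map from a dbt manifest (default path assumes a standard project layout)."""
    with open(path, encoding="utf-8") as fh:
        return json.load(fh)["parent_map"]


if __name__ == "__main__":
    # Hypothetical lineage for a molecular-weight pipeline.
    parent_map = {
        "model.pharma.mw_scores": ["model.pharma.mw_clean"],
        "model.pharma.mw_clean": ["source.pharma.raw_gpc"],
        "source.pharma.raw_gpc": [],
    }
    print(sorted(upstream_nodes(parent_map, "model.pharma.mw_scores")))
```

When a UDF misbehaves in mw_scores, the upstream set is the list of places to look first.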

Real Example: Lineage on a biodegradable polymer pipeline traced the UDFs applied to molecular weight data and pinpointed the step raising division-by-zero errors.

Analogy: Complete lineage = “black box recorder” for pipeline crashes.

Best Practice 3: Standardize Column Tagging & Business Glossary

Core Idea: Tag columns with clear business meaning.

Roadmap:

- Collibra/Atlan glossary: “bioavailability_score” = AUC/MRT ratio

- Auto-tagging via Great Expectations or Spark UDFs

- Query: SELECT * FROM tables WHERE tags LIKE '%pharma-trial%'
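Auto-tagging can start as glossary-driven naming rules. A minimal sketch, assuming hypothetical regex rules and an in-memory stand-in for the catalogue; the last function mirrors the LIKE query above, but matches tags exactly rather than by substring.

```python
import re

# Hypothetical glossary rules: column-name pattern -> business tag.
TAG_RULES = [
    (re.compile(r"bioavailability|(?:^|_)auc(?:_|$)|(?:^|_)mrt(?:_|$)", re.IGNORECASE), "pk-metric"),
    (re.compile(r"drug_release", re.IGNORECASE), "drug_release_profile"),
    (re.compile(r"^(?:patient|subject)_id$", re.IGNORECASE), "pharma-trial"),
]


def tag_columns(columns):
    """Return {column: sorted tags} for columns that match at least one rule."""
    tagged = {}
    for col in columns:
        tags = {tag for pattern, tag in TAG_RULES if pattern.search(col)}
        if tags:
            tagged[col] = sorted(tags)
    return tagged


def tables_with_tag(catalogue, tag):
    """Tables with any column carrying tag (exact match, stricter than LIKE '%tag%')."""
    return sorted(
        name
        for name, columns in catalogue.items()
        if any(tag in tags for tags in tag_columns(columns).values())
    )


if __name__ == "__main__":
    catalogue = {  # hypothetical tables and their columns
        "trial_edc.visits": ["subject_id", "visit_date"],
        "pk.results": ["auc_0_24", "bioavailability_score"],
    }
    print(tag_columns(catalogue["pk.results"]))
    print(tables_with_tag(catalogue, "pharma-trial"))
```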

Pharma Impact: Unified “drug_release_profile” tags across 5 postdoc projects enabled cross-project analysis.

Best Practice 4: Real-Time Tracking Streams

Core Idea: Stream tracking data for live observability.

Stack:

- Kafka topics: lineage, quality, performance

- DataHub real-time indexing + Grafana alerts

Example: Live Spark executor metrics caught a memory leak in the bioreactor pipeline during 24/7 monitoring.
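A live stream only helps if something watches it. A minimal sketch of one watcher, assuming executor memory samples arrive as JSON messages (for example, consumed from the performance topic) with hypothetical executor_id and heap_mb fields. It flags an executor when the least-squares slope of its last N samples exceeds a threshold; the Kafka consumer is omitted, and the window and threshold values are illustrative.

```python
import json
from collections import defaultdict, deque

WINDOW = 6  # samples kept per executor (illustrative)
SLOPE_ALERT_MB = 50.0  # alert if heap grows faster than this many MB per sample (illustrative)


def slope(values):
    """Least-squares slope of values against their index 0..n-1 (needs n >= 2)."""
    n = len(values)
    mean_x = (n - 1) / 2
    mean_y = sum(values) / n
    num = sum((x - mean_x) * (y - mean_y) for x, y in enumerate(values))
    den = sum((x - mean_x) ** 2 for x in range(n))
    return num / den


class LeakDetector:
    def __init__(self, window=WINDOW, threshold=SLOPE_ALERT_MB):
        self.threshold = threshold
        # One bounded history per executor; old samples fall off automatically.
        self.history = defaultdict(lambda: deque(maxlen=window))

    def observe(self, message):
        """Feed one JSON metric message; return an alert string or None."""
        sample = json.loads(message)
        samples = self.history[sample["executor_id"]]
        samples.append(float(sample["heap_mb"]))
        if len(samples) < samples.maxlen:
            return None  # not enough data yet
        s = slope(list(samples))
        if s > self.threshold:
            return f"executor {sample['executor_id']}: heap growing {s:.1f} MB/sample"
        return None


if __name__ == "__main__":
    detector = LeakDetector()
    for i in range(8):
        alert = detector.observe(json.dumps({"executor_id": "3", "heap_mb": 1000 + 120 * i}))
        if alert:
            print(alert)
```

A slope over a window tolerates the sawtooth of normal garbage collection better than alerting on any single high reading.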

Best Practice 5: Schema Version Control

Core Idea: Treat schemas like code: version them, review changes, and roll back when needed.

Tools:

- dbt: schema.yml files in Git

- Spark: Delta Lake/Apache Iceberg enforcement

- Migration: Flyway/Liquibase for warehouse DDL

Anecdote: A postdoc added a “conjugation_efficiency” column mid-trial; Iceberg’s schema evolution absorbed it as a metadata-only change, with no table rewrite.
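Schema review goes faster when each change is classified automatically. A minimal sketch, assuming schemas are stored as simple {column: type} mappings (a hypothetical format, not the dbt or Iceberg representation); it labels a diff additive, like the conjugation_efficiency column, or breaking, like a dropped column or changed type.

```python
def diff_schema(old, new):
    """Compare two {column: type} schemas and classify the changes."""
    added = sorted(set(new) - set(old))
    removed = sorted(set(old) - set(new))
    retyped = sorted(c for c in set(old) & set(new) if old[c] != new[c])
    return {
        "added": added,
        "removed": removed,
        "retyped": retyped,
        # Added columns keep existing readers working; drops and type changes may not.
        "breaking": bool(removed or retyped),
    }


if __name__ == "__main__":
    v1 = {"assay_id": "string", "mw_kda": "double"}
    v2 = {"assay_id": "string", "mw_kda": "double", "conjugation_efficiency": "double"}
    print(diff_schema(v1, v2))
```

For simplicity this treats every type change as breaking, including widenings that Iceberg permits, such as int to long; a CI job could fail a pull request whenever "breaking" is true.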

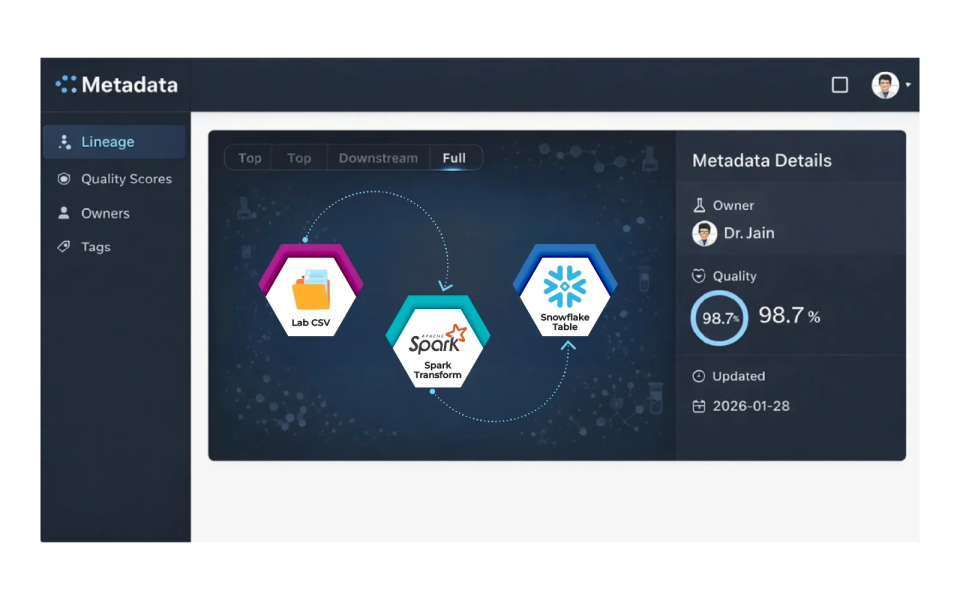

Best Practice 6: Quality Score Integration

Core Idea: Embed quality metrics as core tracking data.

Framework:

{"completeness": "98.7%", "valid_molecular_weights": "15,200/15,500", "freshness_lag": "2h"}

Pharma Use: Great Expectations tests flagged failing assay batches for quarantine.

Metrics Dashboard Example:

| Pipeline | Completeness | Freshness Lag | Last Tested |
|---|---|---|---|
| Polymer Synthesis | 99.2% | 45m | 2026-01-28 |
| Trial EDC | 97.8% | 1h 12m | 2026-01-28 |
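Scores like those in the JSON record and the dashboard can be computed directly from each batch. A minimal sketch, assuming rows arrive as dicts with a hypothetical molecular_weight field, an illustrative valid range of 1,000 to 1,000,000 Da, and an illustrative 95% completeness threshold for quarantine; a Great Expectations suite would replace these hand-written checks in production.

```python
from datetime import datetime, timedelta, timezone

MW_RANGE = (1_000.0, 1_000_000.0)  # illustrative valid molecular-weight range, in Da
MIN_COMPLETENESS = 95.0  # illustrative quarantine threshold, in percent


def format_lag(minutes):
    """Format a lag like the dashboard: '45m' or '1h 12m'."""
    hours, mins = divmod(minutes, 60)
    return f"{hours}h {mins}m" if hours else f"{mins}m"


def quality_record(rows, required, loaded_at, now=None):
    """Summarise completeness, valid molecular weights, and freshness for one batch."""
    now = now or datetime.now(timezone.utc)
    cells = len(rows) * len(required)
    filled = sum(1 for row in rows for col in required if row.get(col) is not None)
    completeness = 100.0 * filled / cells if cells else 0.0
    valid = sum(
        1
        for row in rows
        if row.get("molecular_weight") is not None
        and MW_RANGE[0] <= row["molecular_weight"] <= MW_RANGE[1]
    )
    lag_minutes = int((now - loaded_at).total_seconds() // 60)
    return {
        "completeness": f"{completeness:.1f}%",
        "valid_molecular_weights": f"{valid:,}/{len(rows):,}",
        "freshness_lag": format_lag(lag_minutes),
        "quarantine": completeness < MIN_COMPLETENESS,
    }


if __name__ == "__main__":
    now = datetime(2026, 1, 28, 12, 0, tzinfo=timezone.utc)
    rows = [  # hypothetical assay batch
        {"assay_id": "A1", "molecular_weight": 15200.0},
        {"assay_id": "A2", "molecular_weight": None},
        {"assay_id": "A3", "molecular_weight": 18000.0},
        {"assay_id": "A4", "molecular_weight": -5.0},
    ]
    print(quality_record(rows, ["assay_id", "molecular_weight"], now - timedelta(minutes=72), now))
```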

Best Practice 7: Dataset Ownership Tracking

Core Idea: Every dataset lists its owner, SLA, and deprecation date.

Template:

dataset: polymer_assay_v2

owner: dr.jain@mitwpu.edu

sla: P95 < 5 min

deprecation: 2026-12-31

Enforcement: Airflow blocks pipelines from reading unowned datasets, for example through a validation task at the start of each DAG.
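One way to implement that block is a check that runs before a DAG reads any dataset. A minimal sketch, assuming ownership records follow the key: value template above and sit in a hypothetical in-memory registry. Raising an exception inside an Airflow task fails that task, so downstream tasks with the default trigger rule do not run.

```python
from datetime import date


class OwnershipError(Exception):
    """Raised when a dataset lacks an owner or is past its deprecation date."""


def parse_record(text):
    """Parse the 'key: value' ownership template into a dict with lowercase keys."""
    record = {}
    for line in text.strip().splitlines():
        key, sep, value = line.partition(":")  # split on the first colon only
        if sep:
            record[key.strip().lower()] = value.strip()
    return record


def check_dataset(name, registry, today=None):
    """Raise OwnershipError unless the dataset is owned and not yet deprecated."""
    today = today or date.today()
    record = registry.get(name)
    if record is None or not record.get("owner"):
        raise OwnershipError(f"{name}: no registered owner")
    deprecation = record.get("deprecation")
    if deprecation and date.fromisoformat(deprecation) < today:
        raise OwnershipError(f"{name}: deprecated since {deprecation}")
    return record


if __name__ == "__main__":
    template = """
    dataset: polymer_assay_v2
    owner: dr.jain@mitwpu.edu
    sla: P95 < 5 min
    deprecation: 2026-12-31
    """
    record = parse_record(template)
    registry = {record["dataset"]: record}  # hypothetical registry
    print(check_dataset("polymer_assay_v2", registry, today=date(2026, 6, 1)))
```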

Best Practice 8: Cross-Platform Federation

Core Idea: Query tracking data across Snowflake, S3, and Postgres seamlessly.

Tools:

- Data Mesh: Amundsen federated search

- Trino/Presto catalog integration

- Pharma: Unified “patient_outcomes” search across EDC + lab data
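Federated search amounts to fanning one query out to every platform’s metadata and merging the hits. A minimal sketch, assuming each platform exposes a hypothetical in-memory list of entries with name and optional description fields; in a real deployment Amundsen or DataHub would index these sources, and Trino would handle cross-platform queries against the data itself.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Entry:
    platform: str
    name: str
    description: str


def federated_search(sources, term):
    """Case-insensitive search across all sources; hits sorted by platform, then name."""
    term = term.lower()
    hits = [
        Entry(platform, item["name"], item.get("description", ""))
        for platform, items in sources.items()
        for item in items
        if term in item["name"].lower() or term in item.get("description", "").lower()
    ]
    return sorted(hits, key=lambda e: (e.platform, e.name))


if __name__ == "__main__":
    sources = {  # hypothetical metadata snapshots per platform
        "snowflake": [{"name": "trial_edc.patient_outcomes", "description": "EDC outcomes"}],
        "s3": [{"name": "lab/hplc/patient_outcomes_linked.parquet"}],
        "postgres": [{"name": "lims.samples", "description": "Sample registry"}],
    }
    for hit in federated_search(sources, "patient_outcomes"):
        print(hit.platform, hit.name)
```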

Best Practice 9: Tracking-Driven Automation

Core Idea: Use catalogues to power pipelines automatically.

Examples:

- Auto-partitioning: drive Spark repartition settings from catalogued table statistics

- Self-service: “Show pharma-team tables updated in the last 7 days”

- Alerting: quality_score < 95% → Slack notifications
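The self-service question and the alert rule both reduce to filters over catalogue metadata. A minimal sketch, assuming a hypothetical list of table records with name, owner_team, updated_at, and quality_score fields. The alert is built in the {"text": ...} JSON shape that Slack incoming webhooks accept; sending it, and the webhook URL, are left out.

```python
import json
from datetime import datetime, timedelta, timezone

QUALITY_THRESHOLD = 95.0  # percent, matching the alert rule above


def recently_updated(tables, team, days=7, now=None):
    """Names of the team's tables updated within the last `days` days."""
    now = now or datetime.now(timezone.utc)
    cutoff = now - timedelta(days=days)
    return sorted(t["name"] for t in tables if t["owner_team"] == team and t["updated_at"] >= cutoff)


def quality_alert(tables, threshold=QUALITY_THRESHOLD):
    """Slack webhook payload listing tables below threshold, or None if all pass."""
    failing = sorted((t for t in tables if t["quality_score"] < threshold), key=lambda t: t["name"])
    if not failing:
        return None
    lines = [f"- {t['name']}: {t['quality_score']:.1f}%" for t in failing]
    return json.dumps({"text": "Quality below threshold:\n" + "\n".join(lines)})


if __name__ == "__main__":
    now = datetime(2026, 1, 28, tzinfo=timezone.utc)
    tables = [  # hypothetical catalogue records
        {"name": "polymer_synthesis", "owner_team": "pharma-team",
         "updated_at": now - timedelta(days=2), "quality_score": 99.2},
        {"name": "trial_edc", "owner_team": "pharma-team",
         "updated_at": now - timedelta(days=10), "quality_score": 93.4},
    ]
    print(recently_updated(tables, "pharma-team", now=now))
    print(quality_alert(tables))
```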

Best Practice 10: Compliance Governance Framework

Core Idea: Treat tracking data as audit-ready infrastructure.

Framework:

- Policy: All pipelines emit structured tracking data

- Standards: JSON Schema validation

- Reporting: 21 CFR Part 11 lineage reports

- Training: Postdoc training programs
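JSON Schema is normally enforced with a validator library such as jsonschema. To stay dependency-free, this sketch hand-checks only two JSON Schema keywords, required and type, against a hypothetical tracking-event schema; treat it as an illustration of the policy, not a complete validator.

```python
import json

# Hypothetical schema for tracking events, written in JSON Schema syntax.
TRACKING_SCHEMA = {
    "type": "object",
    "required": ["pipeline", "run_id", "event_time", "inputs", "outputs"],
    "properties": {
        "pipeline": {"type": "string"},
        "run_id": {"type": "string"},
        "event_time": {"type": "string"},
        "inputs": {"type": "array"},
        "outputs": {"type": "array"},
    },
}

# Python types for the JSON Schema type names this sketch supports.
TYPE_MAP = {"string": str, "array": list, "object": dict}


def validate(event, schema=TRACKING_SCHEMA):
    """Return a list of error strings; an empty list means the event passes."""
    if not isinstance(event, dict):
        return ["event must be a JSON object"]
    errors = [f"missing required field: {k}" for k in schema["required"] if k not in event]
    for key, rule in schema["properties"].items():
        if key in event and not isinstance(event[key], TYPE_MAP[rule["type"]]):
            errors.append(f"{key}: expected {rule['type']}")
    return errors


if __name__ == "__main__":
    event = json.loads(
        '{"pipeline": "polymer_synthesis", "run_id": "r-001", '
        '"event_time": "2026-01-28T10:00:00Z", "inputs": []}'
    )
    print(validate(event))  # reports the missing "outputs" field
```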

Regulatory Win: Passed IRB audit with complete spectra-to-statistics lineage.

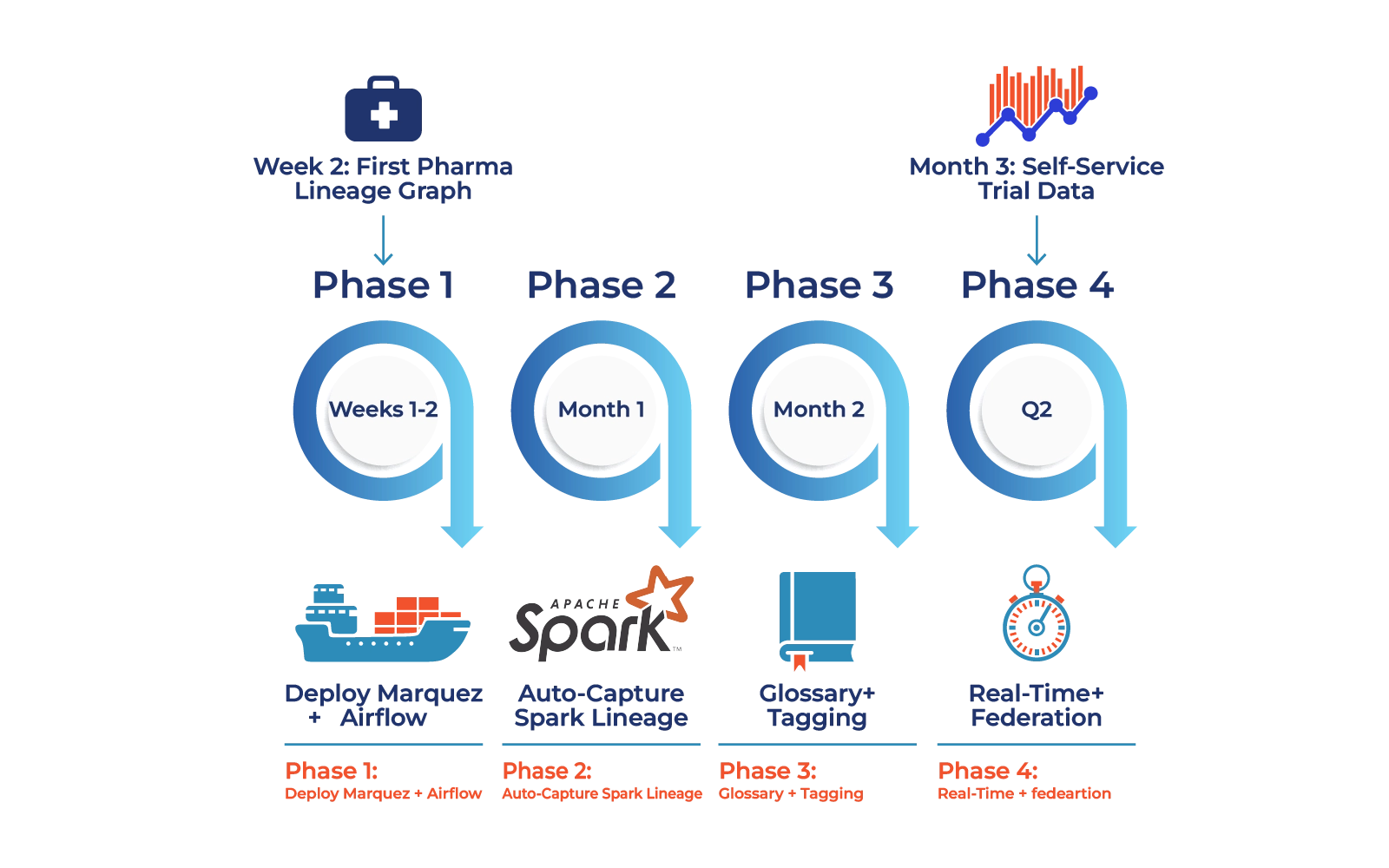

Implementation Roadmap

Phase 1 (Weeks 1-2): Marquez + Airflow integration

Phase 2 (Month 1): Automated Spark lineage capture

Phase 3 (Month 2): Business glossary + tagging

Phase 4 (Q2): Real-time streaming + federation

git clone https://github.com/MarquezProject/marquez && cd marquez && ./docker/up.sh

airflow dags trigger tracking_capture_dag

Proven Results

Teams that adopt these practices report:

- 80% faster debugging

- 60% less compliance prep time

- 5x self-service adoption

- Zero production data mysteries

Drug delivery research shifted from reactive firefighting to strategic analysis across postdoc projects.

What’s your biggest pipeline tracking challenge? Share below.

FAQ: Managing Data Catalogues in Complex Pipelines

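What should a data catalogue track?

Lineage, schemas, quality scores, and data owners.

Which tools should I consider?

Marquez – Best for Spark lineage.

DataHub – Enterprise-grade metadata platform.

Amundsen – Powerful search & discovery focus.

How do I capture Spark lineage?

spark-submit --conf spark.extraListeners=io.openlineage.spark.agent.OpenLineageSparkListener --conf spark.openlineage.transport.type=http --conf spark.openlineage.transport.url=http://localhost:5000 <application> (with the OpenLineage Spark jar on the classpath)

Why does lineage matter for audits?

Complete lineage enables 2-hour audits instead of weeks.

How do I prevent schema drift?

Iceberg or Delta Lake prevents silent column breaks.

What does real-time tracking look like?

Spark → Kafka → live dashboard pipeline.

What does a quality record look like?

{"completeness": "99.2%", "freshness": "45m"}

How quickly do results show up?

By Week 2: 50% faster debugging.

How is dataset ownership enforced?

Airflow blocks unowned datasets automatically.

What is the minimal starting stack?

Marquez + Great Expectations + dbt (Week 1 setup).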

Saurabh Tikekar | Data Engineer
