Imagine you’re a chef prepping a gourmet meal. You wouldn’t toss raw ingredients straight onto the plate, muddy carrots, wilted greens, and all. No, you’d wash, chop, and season them first. In the tech world, clean data delivery works the same magic. It’s not just about delivering raw files. It’s about providing clean, ready-to-use data that clients can work with immediately, without constant back-and-forth. The payoff? Drastically shorter revision cycles, happier clients, and a reputation as the go-to data wizard.

But why does this matter in 2026’s data deluge? Let’s unpack it step by step, with real stakes and solutions.

The Hidden Cost of “Good Enough” Data

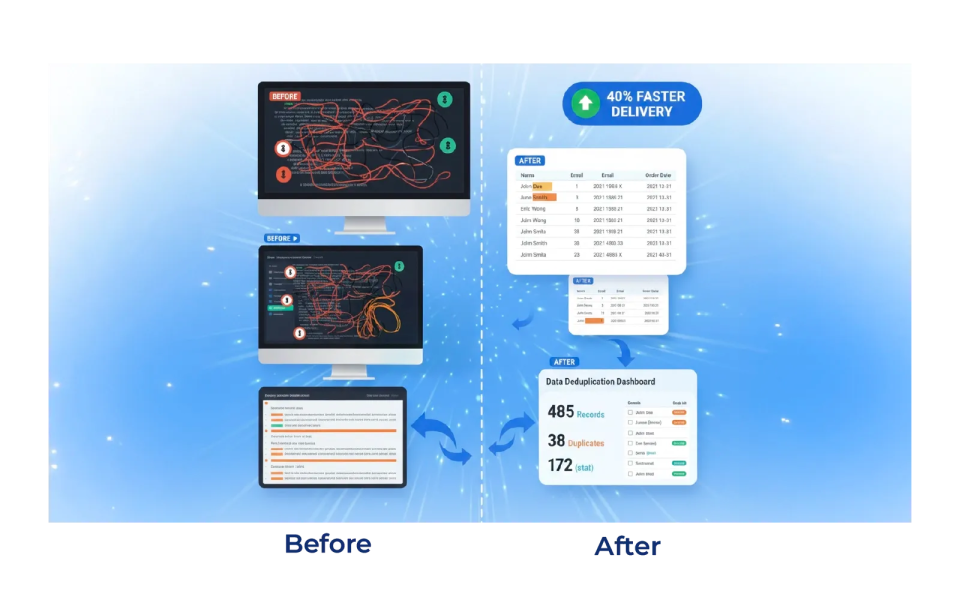

Picture this: Your team spends weeks crunching client datasets for a marketing dashboard. You deliver it—boom. But the client spots inconsistencies: duplicate entries, missing timestamps, and units in the wrong format (meters vs. feet, anyone?). Suddenly, it’s ping-pong city. “Fix this field.” “Regenerate that report.” Revisions drag on for days or weeks, burning billable hours and goodwill.

Industry stats paint a grim picture. Gartner has estimated that poor data quality costs organizations an average of $12.9 million a year. In client services, this shows up as revision cycles that run 3–5 times longer than necessary. Clients ghost you, churn spikes, and your NPS tanks.

The villain? “Dirty” data—incomplete, inaccurate, or unstructured info that sneaks through pipelines like uninvited party crashers.

What Clean Data Delivery Really Means (And Why It’s Your Superpower)

Think of clean data delivery as your invisible force field in client projects – quietly neutralizing threats before they erupt. It’s a systematic process transforming raw chaos into client-ready gold, blending automation, smarts, and foresight. Far from a one-off scrub, it’s a repeatable engine that turns data headaches into competitive moats.

Let’s dissect the core pillars, like layers of a high-tech onion (minus the tears):

- Validation at Every Step: Gatekeepers scan incoming data in real time. Regex catches bogus emails; statistical models flag outliers (e.g., a coffee shop logging $1M in daily sales? Red flag!). Tools like Great Expectations can run 100+ tests per dataset, rejecting filth before it spreads.

- Standardization Magic: Force chaos into harmony with ISO 8601 dates everywhere, currencies converted to a USD base, and snake_case column names. Pitfall alert: Skipping these invites “format wars,” where clients waste hours reformatting your gift.

- Enrichment Without the Hassle: Don’t just clean, elevate. Auto-geocode addresses, infer missing categories via ML, or fuse with public datasets (e.g., weather impacting retail sales). Clients feel the “wow” factor.

- Delivery in Client-Speak: Mirror their world with RESTful APIs for devs, interactive Looker dashboards for execs, or S3 exports for data scientists. Pro move: Include a one-pager schema and sample queries.
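To make the first two pillars concrete, here’s a minimal sketch in plain Python (standard library only). Everything in it is an assumption for illustration: the function names are hypothetical, the email pattern is deliberately loose, the outlier check uses a modified z-score with the commonly used 3.5 cutoff, and the date formats are guesses you’d swap for whatever your sources actually send.

```python
import re
import statistics
from datetime import datetime

# Loose pattern: catches obviously bogus addresses, not every RFC edge case.
EMAIL_RE = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")

def is_valid_email(value):
    return bool(EMAIL_RE.match(value))

def flag_outliers(values, threshold=3.5):
    """Return indexes of values whose modified z-score exceeds the threshold.

    Based on the median absolute deviation (MAD), which stays stable
    even when the outlier itself is enormous.
    """
    median = statistics.median(values)
    mad = statistics.median([abs(v - median) for v in values])
    if mad == 0:  # all values (nearly) identical: nothing to flag
        return []
    return [i for i, v in enumerate(values)
            if 0.6745 * abs(v - median) / mad > threshold]

def to_snake_case(name):
    """'Order Date' or 'orderDate' becomes 'order_date'."""
    name = re.sub(r"(?<=[a-z0-9])(?=[A-Z])", "_", name.strip())
    name = re.sub(r"[^0-9A-Za-z]+", "_", name)
    return name.strip("_").lower()

# Assumed input formats. Note that month-first (US) order is an assumption:
# "03/07/2026" is ambiguous unless you know the source.
DATE_FORMATS = ("%Y-%m-%d", "%m/%d/%Y")

def to_iso_date(raw):
    """Normalize a date string to ISO 8601 (YYYY-MM-DD) or raise ValueError."""
    for fmt in DATE_FORMATS:
        try:
            return datetime.strptime(raw.strip(), fmt).date().isoformat()
        except ValueError:
            continue
    raise ValueError(f"unrecognized date format: {raw!r}")

daily_sales = [820, 910, 875, 1_000_000, 790, 905]
print(flag_outliers(daily_sales))        # [3], the $1M coffee shop day
print(to_snake_case("Unit Price (USD)"))  # unit_price_usd
print(to_iso_date("03/07/2026"))          # 2026-03-07
```

In a real pipeline you’d likely express the same checks as Great Expectations suites or dbt tests; the point here is only that each rule is small, explicit, and repeatable.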

Mini Case: Retail Insights Co. adopted this for supplier data. Pre-clean: 18% error rate, 10-day revisions. Post: 0.5% errors, 1-day handoffs. Revenue per client jumped 35% from upsells.

Why the superpower? Forrester reports 55% faster time-to-value and 62% revision reduction. It frees your team for high-value work, boosts retention (clients hate friction), and sparks referrals. Common trap? Over-relying on manual checks. Automation is what lets the process scale.

Quick-Start Checklist:

- Profile data sources (e.g., via ydata-profiling, formerly pandas-profiling).

- Define 10 golden rules (e.g., no nulls in key fields).

- Test on a sandbox project.
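As a rough sketch of the first two checklist items, the snippet below counts nulls per column and then runs a tiny set of “golden rules” over the rows. The column names (`order_id`, `amount`) and the two rules are hypothetical placeholders; your own list would come from what the profile turns up.

```python
from collections import Counter

def profile_nulls(rows):
    """Count missing values (None or empty string) per column."""
    nulls = Counter()
    for row in rows:
        for column, value in row.items():
            if value is None or value == "":
                nulls[column] += 1
    return dict(nulls)

# Hypothetical golden rules: name -> function that returns True for a good row.
GOLDEN_RULES = {
    "order_id is never null":
        lambda r: r.get("order_id") not in (None, ""),
    "amount is a non-negative number":
        lambda r: isinstance(r.get("amount"), (int, float)) and r["amount"] >= 0,
}

def check_rules(rows, rules=GOLDEN_RULES):
    """Return {rule name: [indexes of failing rows]} for every rule that fails."""
    failures = {}
    for name, rule in rules.items():
        bad = [i for i, row in enumerate(rows) if not rule(row)]
        if bad:
            failures[name] = bad
    return failures

sandbox_rows = [
    {"order_id": "A1", "amount": 19.5},
    {"order_id": None, "amount": 5},
    {"order_id": "A3", "amount": -2},
]
print(profile_nulls(sandbox_rows))  # {'order_id': 1}
print(check_rules(sandbox_rows))
```

Keeping rules as named entries in one dictionary means the failure report reads in plain language, which is handy when you share it with a client.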

Master this, and you’re not delivering data—you’re delivering dominance.

Real-World Wins: From Revision Hell to Client Raves

Let’s get concrete. Take Acme Analytics, a mid-sized firm serving e-commerce brands. In the pre-clean data era, revisions ate 25% of project time. They implemented automated cleaning with tools like Great Expectations and dbt, and bam: average cycle time plummeted from 14 days to 3. One client testimonial? “It’s like you read our minds. No tweaks needed. We launched the campaign on Day 1.”

Or consider HealthTech Innovators. Messy patient datasets led to compliance nightmares and endless clarifications. Post-clean delivery? FDA audits passed flawlessly, revisions halved, and partnerships doubled.

Pro Tip: Start small. Pilot clean delivery on one client vertical. Track metrics like revision tickets (aim for less than 10% of projects) and time-to-insight.
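If you want to track those pilot metrics without buying anything, a few lines of Python will do. This is a sketch under stated assumptions: the `Delivery` record and its fields are hypothetical, “revision rate” is read as the share of projects with at least one revision ticket, and time-to-insight is approximated by days from delivery to sign-off.

```python
from dataclasses import dataclass
from statistics import mean

@dataclass
class Delivery:
    project: str
    revision_tickets: int    # tickets the client opened after handoff
    days_to_signoff: float   # stand-in for time-to-insight

def revision_rate(deliveries):
    """Share of projects that needed at least one revision ticket."""
    if not deliveries:
        return 0.0
    revised = sum(1 for d in deliveries if d.revision_tickets > 0)
    return revised / len(deliveries)

def average_days_to_signoff(deliveries):
    return mean([d.days_to_signoff for d in deliveries])

pilot = [
    Delivery("spring-campaign", 0, 3),
    Delivery("supplier-feed", 2, 14),
    Delivery("loyalty-dashboard", 0, 4),
]
print(f"revision rate: {revision_rate(pilot):.0%}")
print(f"avg days to sign-off: {average_days_to_signoff(pilot)}")
```

Run it on the same client vertical before and after the pilot, and you have a simple comparison to bring to the next review.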

The Tech Stack to Make It Happen

No PhD required. Here’s your starter kit:

| Tool | Superpower | Best For |
|---|---|---|
| Pandas/Polars | Lightning-fast cleaning in Python | Internal ETL pipelines |
| Great Expectations | Automated validation tests | Ensuring "no surprises" |
| dbt | SQL-based transformations | Scalable data modeling |
| Monte Carlo | Real-time anomaly detection | Enterprise monitoring |
| Airbyte | Seamless, clean API delivery | Client-facing exports |

Integrate these into CI/CD, and you’re golden. Bonus: AI agents now auto-generate cleaning scripts from natural language prompts.
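What does a CI/CD gate look like in practice? Here’s a minimal, tool-agnostic sketch: a function that inspects a CSV export and returns a list of problems, plus a helper that turns that list into an exit code. The required columns and the `order_id` key are hypothetical, and a real setup would more likely call Great Expectations or dbt tests at this step.

```python
import csv
import io

# Hypothetical delivery schema; replace with the columns your client expects.
REQUIRED_COLUMNS = ("order_id", "order_date", "amount")

def gate(csv_text):
    """Return human-readable problems; an empty list means safe to ship."""
    reader = csv.DictReader(io.StringIO(csv_text))
    missing = [c for c in REQUIRED_COLUMNS if c not in (reader.fieldnames or [])]
    if missing:
        return [f"missing columns: {', '.join(missing)}"]

    problems = []
    seen_ids = set()
    # Line 1 is the header; numbering assumes no multi-line quoted fields.
    for line_no, row in enumerate(reader, start=2):
        order_id = row["order_id"]
        if not order_id:
            problems.append(f"line {line_no}: empty order_id")
        elif order_id in seen_ids:
            problems.append(f"line {line_no}: duplicate order_id {order_id}")
        seen_ids.add(order_id)
        if not row["order_date"]:
            problems.append(f"line {line_no}: missing order_date")
    return problems

def exit_code(problems):
    """CI convention: a non-zero exit code fails the job and blocks delivery."""
    return 1 if problems else 0

export = (
    "order_id,order_date,amount\n"
    "A1,2026-01-05,19.50\n"
    "A1,2026-01-06,5.00\n"
    "A2,,3.25\n"
)
for problem in gate(export):
    print(problem)
```

In a pipeline step you’d read the real export from disk and finish with `sys.exit(exit_code(problems))`, so a dirty file never reaches the client.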

Your Action Plan: Clean Data, Shorter Cycles, Bigger Wins

Ready to level up?

- Audit Your Pipeline: Run a data quality scan this week. What’s your dirt score?

- Automate Ruthlessly: Set up validation gates before delivery.

- Measure and Iterate: Track revision volume pre/post. Share wins with clients to build trust.

- Scale Thoughtfully: Train your team; evangelize internally.

In a world drowning in data, clean delivery isn’t optional—it’s your edge. Clients won’t just stick around; they’ll advocate for you. What’s your first move?

FAQ: Clean Data Delivery Quick Hits

How long does it take to implement clean data delivery?

For most teams, a pilot takes 1–2 weeks using open-source tools. Full rollout typically takes 1–3 months, with ROI kicking in immediately through fewer client revisions.

How is clean data delivery different from data governance?

Governance sets long-term rules like policies and ownership. Clean delivery is the tactical execution: hands-on scrubbing, validating, and packaging data for today’s client handoff.

Can small teams do this on a tight budget?

Absolutely. Free tools like Pandas and Great Expectations handle up to 80% of needs. You can scale to paid solutions only as data volume grows, so no heavy upfront investment is required.

How do we measure success?

Track success using key metrics like revision rate (under 5% of deliveries), data accuracy (above 99%), and client feedback, such as an NPS improvement of 20+ points. Most tools auto-report these metrics.

Can AI handle data cleaning on its own?

No. AI speeds up the process by automatically fixing up to 70% of issues, but humans still define validation rules and manage edge cases such as business logic nuances.

Namratha L Shenoy | Data Engineer
