The integration of Artificial Intelligence (AI) technologies into data processing systems has significantly enhanced their capabilities. However, the belief that AI has replaced the need for traditional web scraping technologies is not correct.

For businesses that often depend on web data, like e-commerce websites, market researchers, or supply chain businesses, AI’s role in scraping must be understood. The process of web scraping has not been dismissed; rather, its core components have been optimized.

AI technologies can be applied to certain aspects of web scraping, such as the analysis or normalization of the acquired data.

This article examines the most prevalent myths about AI and web scraping, along with the strengths and limitations of AI, to explain why a hybrid solution delivered through a managed web scraping service is the most effective and efficient approach.

Understanding AI’s Role in Web Scraping

Recent developments in large language models like ChatGPT have created significant buzz around AI, leading to the assumption that AI has the capability to completely replace conventional web scraping methods.

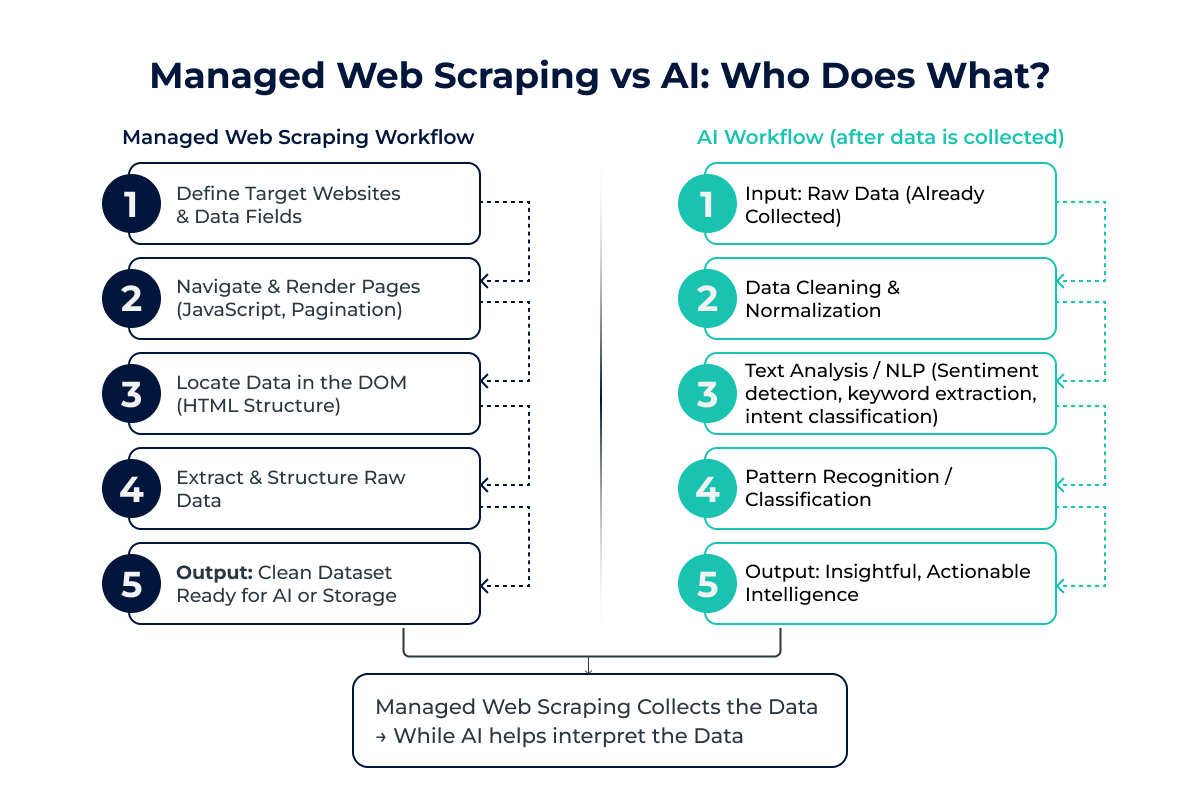

However, there is a clear distinction between scraping and AI. Scraping is essentially browsing through websites and retrieving real-time data in raw form from the Document Object Model (DOM).

AI, on the other hand, specializes in analyzing that data, for example by determining sentiment and normalizing text. Misunderstanding the difference can lead to inefficient data strategies.

A managed scraping service merges the accuracy of traditional scraping with the analytical capabilities of AI to provide dependable data that can be acted upon.

Myth 1: AI Has Replaced Traditional Web Scraping

The Misconception

The misconception is that the emergence of AI has made traditional web scraping redundant, and that tools such as LLMs can pull data from websites autonomously without the need for web crawlers.

The Reality

AI-driven models such as GPT specialize in natural language processing, not browser-based tasks. On their own, they are not able to do the following:

i). Navigate DOM Structures: Scraping demands parsing HTML and JSON to fetch elements like review text and product prices. A language model on its own does not retrieve pages or traverse a live DOM.

ii). Handle JavaScript Rendering: A large number of websites are built using frameworks like React and Angular, so headless browsers driven by tools like Puppeteer or Playwright are needed to render dynamic content.

iii). Bypass Anti-Bot Measures: CAPTCHAs, IP rate limiting, and behavioral analytics such as mouse movement tracking effectively prevent AI scraping without dedicated scraping infrastructure.

Traditional scraping frameworks and tools like Scrapy and Selenium remain indispensable for building the infrastructure that gathers raw data for AI to analyze and turn into insights.

As an illustration, an AI-driven e-commerce price monitoring system must first scrape competitor price data before it can analyze and compare pricing trends in depth.
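The DOM navigation step described above can be sketched with Python's standard library alone. This is a minimal illustration, not a production scraper: the markup, the class names `price` and `review-text`, and the `ProductParser` helper are all assumptions made for the example, and real projects would more likely use Scrapy or a dedicated HTML parsing library.

```python
from html.parser import HTMLParser

# Hypothetical product-page markup; real sites use their own structure and class names.
SAMPLE_HTML = """
<div class="product">
  <span class="price">$19.99</span>
  <p class="review-text">Works well, arrived on time.</p>
  <p class="review-text">Stopped charging after a week.</p>
</div>
"""


class ProductParser(HTMLParser):
    """Collects the text of elements whose class matches a target field."""

    # Maps a CSS class (assumed for this example) to the attribute that stores its text.
    TARGETS = {"price": "prices", "review-text": "reviews"}

    def __init__(self):
        super().__init__()
        self.prices = []
        self.reviews = []
        self._current = None  # name of the list currently being filled

    def handle_starttag(self, tag, attrs):
        classes = (dict(attrs).get("class") or "").split()
        for cls in classes:
            if cls in self.TARGETS:
                self._current = self.TARGETS[cls]

    def handle_data(self, data):
        text = data.strip()
        if self._current and text:
            getattr(self, self._current).append(text)

    def handle_endtag(self, tag):
        # Simplification: any closing tag ends the current field,
        # so nested markup inside a target element is not supported.
        self._current = None


def extract_product_fields(html):
    parser = ProductParser()
    parser.feed(html)
    return {"prices": parser.prices, "reviews": parser.reviews}


result = extract_product_fields(SAMPLE_HTML)
print(result)
```

The output of a step like this is the raw, structured input that an AI model would then analyze, for example by scoring the sentiment of each review.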

Myth 2: Websites Can Be Scraped at Will Through AI

The Misconception

AI’s ability to understand context leads some to believe it can automatically scrape any website without manual configuration.

The Reality

Websites range from static HTML to advanced JavaScript single-page applications (SPAs), and many deploy anti-scraping technologies such as reCAPTCHA and other bot detection. AI alone is unable to:

i). Adapt to Different Layouts: Automated extraction uses specific selectors, such as CSS or XPath, geared to each website’s markup. The scraper must be configured to scan and recognize each website’s document object model (DOM) tree.

ii). Handle Interaction-Driven Dynamic Content: Elements such as pop-up windows and content that keeps loading as the user browses need event-driven automation.

iii). Avoid Detection: AI by itself cannot rotate proxies or emulate real user behavior, such as randomized, human-like mouse movement.

As an example, scraping Amazon product pages involves dealing with pagination and CAPTCHAs, which requires traditional scraping methods. AI can assist with post-extraction tasks, such as summarizing product descriptions, but only when a scraping framework supplies the data.
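Pagination and proxy rotation, two of the tasks named above, can be sketched as follows. This is an illustration under stated assumptions: `fake_fetch` stands in for a real HTTP request, the proxy addresses are placeholder values from a documentation IP range, and the response shape (`items`, `has_next`) is invented for the example.

```python
from itertools import cycle

# Placeholder proxy addresses (documentation IP range), not real endpoints.
PROXIES = [
    "http://192.0.2.10:8080",
    "http://192.0.2.11:8080",
    "http://192.0.2.12:8080",
]


def fake_fetch(page, proxy):
    """Stand-in for an HTTP request routed through `proxy`.

    Returns the items on the page and whether another page follows.
    """
    catalog = {1: ["item-a", "item-b"], 2: ["item-c"], 3: ["item-d"]}
    return {"items": catalog.get(page, []), "has_next": page < 3}


def crawl_all_pages(fetch, proxies, max_pages=50):
    """Walk paginated results, using the next proxy in the pool for each request."""
    proxy_pool = cycle(proxies)
    items, proxies_used = [], []
    page = 1
    while page <= max_pages:  # hard cap guards against endless pagination
        proxy = next(proxy_pool)
        response = fetch(page, proxy)
        items.extend(response["items"])
        proxies_used.append(proxy)
        if not response["has_next"]:
            break
        page += 1
    return items, proxies_used


items, proxies_used = crawl_all_pages(fake_fetch, PROXIES)
print(items)
print(proxies_used)
```

Passing the fetch function in as a parameter keeps the crawl loop independent of any particular HTTP client or headless browser.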

Myth 3: AI Makes Web Scraping Cheaper and Faster

The Misconception

The claim of AI making web scraping quicker and more efficient is rooted in the belief that it eliminates the need for advanced infrastructure.

The Reality

Web scraping comes with its own costs in both time and money. While some AI technologies can improve efficiency, they do not remove these underlying costs:

i). Infrastructure Costs: As always, scraping requires proxy networks, cloud servers, as well as headless browsers, which are vital for scaling and dealing with anti-bot measures.

ii). Operational Complexity: Tasks such as rate limiting, retry logic, and session management remain time-consuming, no matter the level of AI use.

iii). AI Overhead: Running AI models, such as for NLP or anomaly detection, adds compute costs of its own, which can outweigh the savings.

Take, for example, a retailer scraping job listings. AI will only be of use for post-processing tasks, such as role categorization. The backbone of the scraping job is still the infrastructure, which AI augments rather than replaces.
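The retry logic listed under Operational Complexity is one example of work that stays in the scraping layer. A minimal sketch with exponential backoff might look like this; `TransientError`, `flaky_fetch`, and the delay values are assumptions for the illustration, not part of any specific library.

```python
import time


class TransientError(Exception):
    """Hypothetical error type for failures worth retrying (timeouts, HTTP 429 or 503)."""


def fetch_with_retry(fetch, url, max_attempts=4, base_delay=1.0, sleep=time.sleep):
    """Call `fetch(url)`, waiting base_delay * 2**n between failed attempts."""
    for attempt in range(1, max_attempts + 1):
        try:
            return fetch(url)
        except TransientError:
            if attempt == max_attempts:
                raise  # give up after the final attempt
            sleep(base_delay * 2 ** (attempt - 1))


# Demo: a fetch function that fails twice and then succeeds.
calls = {"count": 0}


def flaky_fetch(url):
    calls["count"] += 1
    if calls["count"] < 3:
        raise TransientError("temporary block")
    return f"<html>content of {url}</html>"


delays = []  # record requested delays instead of actually sleeping
page = fetch_with_retry(flaky_fetch, "https://example.com/jobs", sleep=delays.append)
print(page, delays)
```

The `sleep` parameter is injected so the backoff schedule can be inspected in a test without waiting; in real use the default `time.sleep` applies.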

Myth 4: AI Eliminates the Need for Developers

The Misconception

No-code AI-powered scraping tools are often advertised to businesses that want to remove developers from the equation altogether.

The Reality

Even AI-augmented scraping needs human expertise to:

i). Configure Targets: Specify and outline the data fields (title, price etc.) and data sources, using CSS selectors or XPath.

ii). Validate Outputs: Assess the accuracy and completeness of the scraped data, resolving issues such as duplicates or missing fields.

iii). Manage Anti-Bot Defenses: Maintain access using proxy rotation, behavioral emulation, and CAPTCHA solvers.

iv). Ensure Compliance: Meeting GDPR, CCPA, and site ToS obligations calls for audit trails, ethical scraping practices, and maintained documentation.

As an example, consider a supply chain manager scraping data from supplier portals. Even with AI, they require developers to set up and supervise scraping pipelines. Developers are still important to orchestrate hybrid systems and manage data fidelity.
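The output validation step above is the kind of check a developer typically codifies. The sketch below rejects records with missing fields and drops duplicates; the required field names and the choice of `url` as the deduplication key are assumptions for the example.

```python
# Hypothetical schema: every scraped record is expected to carry these fields.
REQUIRED_FIELDS = ("title", "price", "url")


def validate_records(records, required=REQUIRED_FIELDS, key="url"):
    """Split records into valid ones and rejected ones with a reason."""
    seen = set()
    valid, rejected = [], []
    for record in records:
        missing = [f for f in required if record.get(f) in (None, "")]
        if missing:
            rejected.append((record, "missing: " + ", ".join(missing)))
        elif record[key] in seen:
            rejected.append((record, "duplicate"))
        else:
            seen.add(record[key])
            valid.append(record)
    return valid, rejected


scraped = [
    {"title": "Steel bolts M8", "price": "4.20", "url": "https://example.com/p/1"},
    {"title": "Steel bolts M8", "price": "4.20", "url": "https://example.com/p/1"},
    {"title": "Copper wire 2mm", "price": "", "url": "https://example.com/p/2"},
    {"title": "Rubber gasket", "price": "1.10", "url": "https://example.com/p/3"},
]

valid, rejected = validate_records(scraped)
print(len(valid), [reason for _, reason in rejected])
```

Keeping the rejection reason alongside each record gives the person supervising the pipeline something concrete to investigate.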

Where AI Truly Shines in Web Scraping

AI does not eliminate scraping, but it does improve certain processes once the data has been extracted:

i). Text Cleaning: Normalization of data and standardization of product names across sources.

ii). Sentiment Analysis: Classifying customer reviews as positive or negative for e-commerce.

iii). Data Categorization: Grouping products through clustering algorithms into “electronics” vs “appliances”.

iv). Anomaly Detection: Flagging outliers in scraped data, for example a sudden decrease in price.

v). Translation and Normalization: Unifying schemas for multilingual data or data in differing formats.

As an example, a marketplace that scrapes competitor reviews can run AI sentiment analysis on them to help inform product strategy. These operations need traditional scraping to gather the data first.
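The anomaly detection item above, a sudden decrease in price, can be illustrated with a simple statistical rule. Note that this sketch uses a rolling median as a stand-in for a trained model; the window size, the 30% threshold, and the sample price history are assumptions chosen for the example.

```python
from statistics import median


def flag_price_drops(prices, window=5, threshold=0.3):
    """Return indexes where the price falls more than `threshold` (as a fraction)
    below the median of the previous `window` prices."""
    flagged = []
    for i in range(window, len(prices)):
        baseline = median(prices[i - window:i])
        if baseline > 0 and (baseline - prices[i]) / baseline > threshold:
            flagged.append(i)
    return flagged


# Hypothetical daily prices scraped for one product.
history = [49.99, 50.49, 49.99, 51.00, 50.25, 29.99, 50.10]
flagged = flag_price_drops(history)
print(flagged)
```

Using the median rather than the mean keeps a single outlier in the window from distorting the baseline for the following days.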

Where Traditional Scraping Remains Essential

Traditional scraping frameworks remain best suited to areas that need accuracy and robustness:

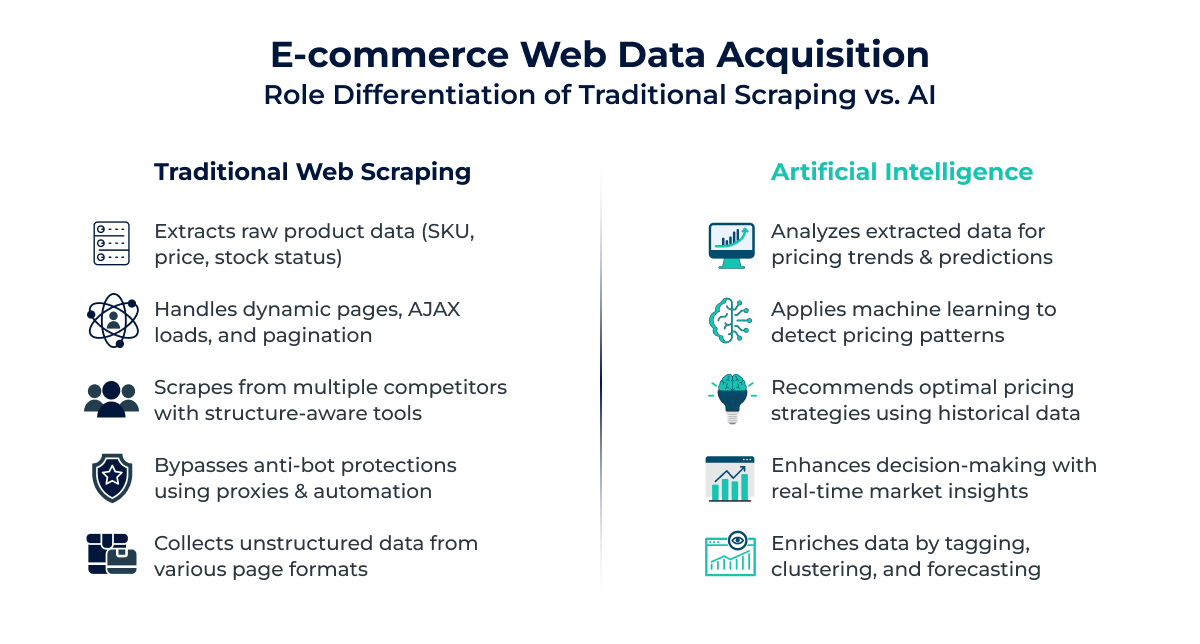

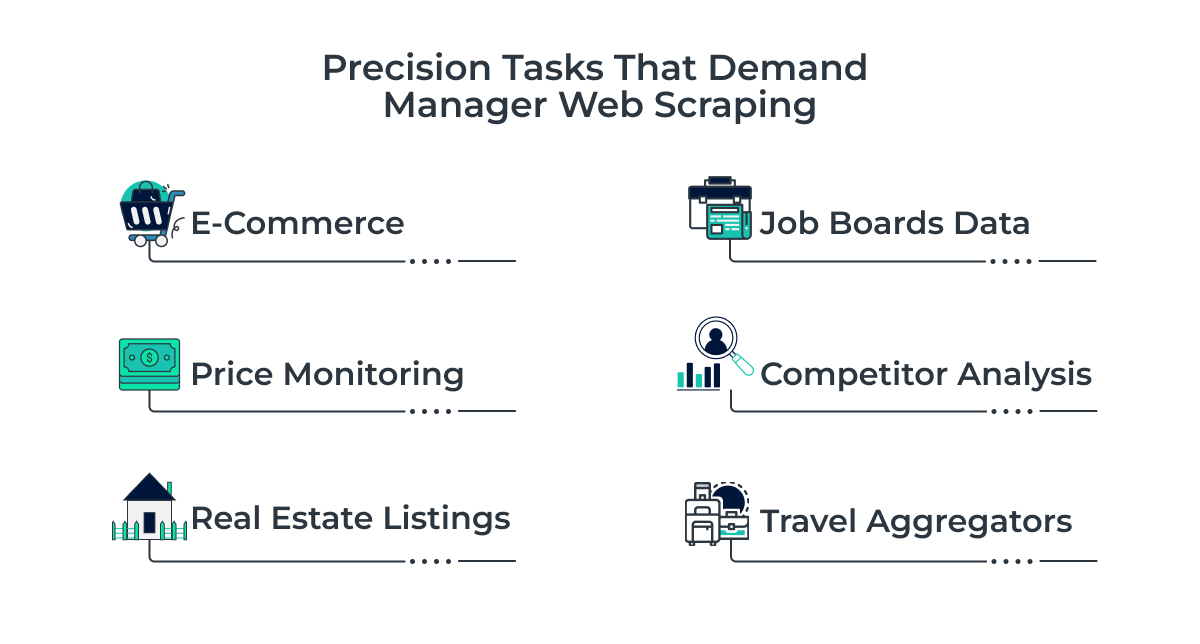

i). E-commerce Catalogs: Automated retrieval of product information such as SKUs and prices from dynamic, paginated pages.

ii). Price Monitoring: Automated retrieval of real-time prices from Amazon and other marketplaces while handling rate limits.

iii). Real Estate Listings: Automated retrieval of property data from frequently restructured websites.

iv). Job Boards: Automated retrieval of listings from multiple, differently structured platforms.

v). Competitor Analysis: Automated tracking of competitor strategy through stealth scraping, without triggering bans.

These use cases require Scrapy for crawling, Selenium for browser automation, and proxy networks for evasion, all routines that AI cannot duplicate.

The Hybrid Model: Combining Scraping and AI for Success

The optimal method for data acquisition is a combination of traditional scraping and AI analytics:

i). Crawlers: Use frameworks equipped to handle anti-bot measures for webpage navigation and raw HTML or JSON extraction.

ii). Extractors: Use CSS selectors and XPath to extract particular fields, such as dynamically rendered prices and titles.

iii). Machine Learning: Apply AI processing for sentiment analysis, categorization, and anomaly detection.

iv). Orchestration: Run the automated system under DevOps oversight to manage scaling, retries, and compliance.

For instance, a retailer scraping competitor sites can employ AI-powered models to categorize products and detect pricing anomalies after crawlers have collected the product data. Hybrid pipelines run by managed services like RDS Data deliver enriched data without the hassle of in-house arrangements.
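The four stages above can be wired together as in the sketch below. Everything here is a simplified assumption: `crawl` returns canned HTML instead of making requests, the extractor uses regular expressions for brevity where a real one would use CSS selectors or XPath, and `enrich` uses keyword and median rules as stand-ins for actual AI models.

```python
import re
from statistics import median

# Canned pages standing in for crawler output; URLs and markup are hypothetical.
PAGES = {
    "https://example.com/p/1": '<h1 class="title">USB-C Cable</h1><span class="price">$9.99</span>',
    "https://example.com/p/2": '<h1 class="title">HDMI Cable</h1><span class="price">$10.49</span>',
    "https://example.com/p/3": '<h1 class="title">Wireless Mouse</h1><span class="price">$3.00</span>',
}

TITLE_RE = re.compile(r'<h1 class="title">(.*?)</h1>')
PRICE_RE = re.compile(r'<span class="price">\$([\d.]+)</span>')


def crawl(urls):
    """Stage 1: fetch raw HTML (here, looked up from the canned pages)."""
    return [(url, PAGES[url]) for url in urls]


def extract(url, html):
    """Stage 2: pull structured fields out of raw HTML; None if a field is absent."""
    title = TITLE_RE.search(html)
    price = PRICE_RE.search(html)
    if not (title and price):
        return None
    return {"url": url, "title": title.group(1), "price": float(price.group(1))}


def enrich(records):
    """Stage 3: rule-based stand-in for AI categorization and anomaly detection."""
    baseline = median(r["price"] for r in records)
    for r in records:
        r["category"] = "cables" if "cable" in r["title"].lower() else "other"
        r["price_anomaly"] = r["price"] < 0.5 * baseline
    return records


def run_pipeline(urls):
    """Stage 4: orchestrate the stages and skip pages that fail extraction."""
    records = []
    for url, html in crawl(urls):
        record = extract(url, html)
        if record is not None:
            records.append(record)
    return enrich(records) if records else []


enriched = run_pipeline(list(PAGES))
for row in enriched:
    print(row)
```

The point of the layout is the separation of concerns: each stage can be replaced (a real crawler, a real model) without touching the others.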

How a Managed Service Enhances Web Scraping

With the myths about AI and web scraping set aside, a managed service that pairs traditional scraping with AI can be tailored to specific needs and serve as a strategic solution. Here’s how:

i). Seamless Data Extraction: JavaScript rendering, DOM structure traversal, and CAPTCHA handling are covered by proxy networks and advanced crawling tools such as Scrapy and Playwright. An e-commerce scraper, for example, can extract competitor price listings from multiple sites simultaneously with far less risk of IP banning.

ii). AI-Powered Enrichment: AI-based enrichment, such as sentiment analysis of consumer and product reviews and categorization of product listings, renders the data actionable. Role classification on scraped job board data is one example, where managed services automate work previously done by hand.

iii). Scalable Infrastructure: During peak demand, performance consistency is guaranteed by cloud-based distributed systems for large-scale scraping spanning hundreds of sources. This enables real-time monitoring of markets without the hassle of in-house infrastructure scaling.

iv). Compliance Assurance: Managed teams incorporate ethical mechanisms such as rate limiting and audit trails in alignment with GDPR and CCPA. For instance, a supplier can scrape vendor data as long as no personal data is captured, mitigating legal exposure.

v). Expert Oversight: Specialized teams set up goals, check outputs, and refine pipelines, so no in-house developer is required. A retailer is assured that experts maintain stealth scraping of competitor catalogs, ensuring continuous data availability.
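The rate limiting and audit trails mentioned under Compliance Assurance could be combined in a small wrapper like the one below. This is a sketch under assumptions: `PoliteFetcher`, the two-second interval, and the audit record fields are invented for the illustration, and the clock and sleep functions are injected so the demo runs instantly.

```python
import time
from datetime import datetime, timezone


class PoliteFetcher:
    """Enforces a minimum interval between requests and records each one."""

    def __init__(self, fetch, min_interval=2.0, clock=time.monotonic, sleep=time.sleep):
        self._fetch = fetch
        self._min_interval = min_interval
        self._clock = clock
        self._sleep = sleep
        self._last_request = None
        self.audit_log = []

    def get(self, url):
        if self._last_request is not None:
            wait = self._min_interval - (self._clock() - self._last_request)
            if wait > 0:
                self._sleep(wait)  # rate limit: pause until the interval has passed
        self._last_request = self._clock()
        body = self._fetch(url)
        # Audit trail: what was requested, when, and how much came back.
        self.audit_log.append({
            "url": url,
            "requested_at": datetime.now(timezone.utc).isoformat(),
            "length": len(body),
        })
        return body


class FakeClock:
    """Test clock whose time only advances when sleep() is called."""

    def __init__(self):
        self.now = 0.0

    def time(self):
        return self.now

    def sleep(self, seconds):
        self.now += seconds


fake = FakeClock()
fetcher = PoliteFetcher(lambda url: "<html></html>", clock=fake.time, sleep=fake.sleep)
fetcher.get("https://example.com/vendors?page=1")
fetcher.get("https://example.com/vendors?page=2")
print(fake.now, len(fetcher.audit_log))
```

In real use the defaults (`time.monotonic` and `time.sleep`) apply, and the audit log would be written to durable storage rather than kept in memory.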

Through a partnership with a managed service provider like RDS Data, companies gain access to a hybrid scraping ecosystem that integrates the technical precision of scraping and the analytical power of AI, promoting efficiency and company growth.

Conclusion

AI undoubtedly enhances data analysis and enrichment. However, it does not seem capable of replacing the foundational role of traditional web scraping in the acquisition of raw, organized data. A combination of the two, AI and scraping infrastructure, produces the most dependable results. Managed services such as RDS Data offer modern data-centric companies scalability and precision while meeting legal and regulatory frameworks.

Ready to construct a smarter and AI-powered web scraping pipeline?

Contact Us and get a complimentary consultation to learn how our managed services can provide deep enriched data you can trust, designed specifically for your requirements.

Tired of broken scrapers and messy data?

Let us handle the complexity while you focus on insights.